Juan Enriquez at SFI

"Are Humans Optimal?"

- Historically, several hominin species have coexisted on the planet at any given time. Today, humans are the only surviving hominin species.

- Typically, when only one species of a lineage remains, that is a sign of impending extinction.

- The difference between humans and Neanderthals is less than 0.004% at the genomic level.

- The differences are in sperm, testes, smell, and skin.

- There was an experiment in Russia to domesticate wild foxes through selective breeding. Researchers took only the friendliest foxes and bred them with one another. Within a few generations, the foxes became tame enough to be kept as pets.

- We can now sequence and acquire genetic data three times faster than we can store it. We have sequenced about 10,000 human genomes to date, and we will soon start to find more differences.

- One finding involves the ACE gene: no one has climbed Everest without it.

- Another is the 577R variant of the ACTN3 gene. All male Olympians in power sports have it; many other people don't.
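To give a rough sense of scale for the "less than 0.004%" human/Neanderthal figure, the short calculation below converts that percentage into an upper bound on differing base pairs. It takes the talk's figure at face value and assumes a haploid human genome of about 3.1 billion base pairs; both the genome size and the variable names are illustrative assumptions, not numbers from the talk.

```python
# Rough scale of the "less than 0.004%" human/Neanderthal genomic difference.
# ASSUMPTION: a haploid human genome of roughly 3.1 billion base pairs.
GENOME_BP = 3_100_000_000
DIFF_FRACTION = 0.004 / 100  # 0.004% expressed as a fraction

# Upper bound on the number of base pairs that differ.
max_differing_bp = GENOME_BP * DIFF_FRACTION
print(f"At most ~{max_differing_bp:,.0f} base pairs differ")
```

Under these assumptions the bound comes out to roughly 124,000 base pairs, a tiny slice of the genome.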

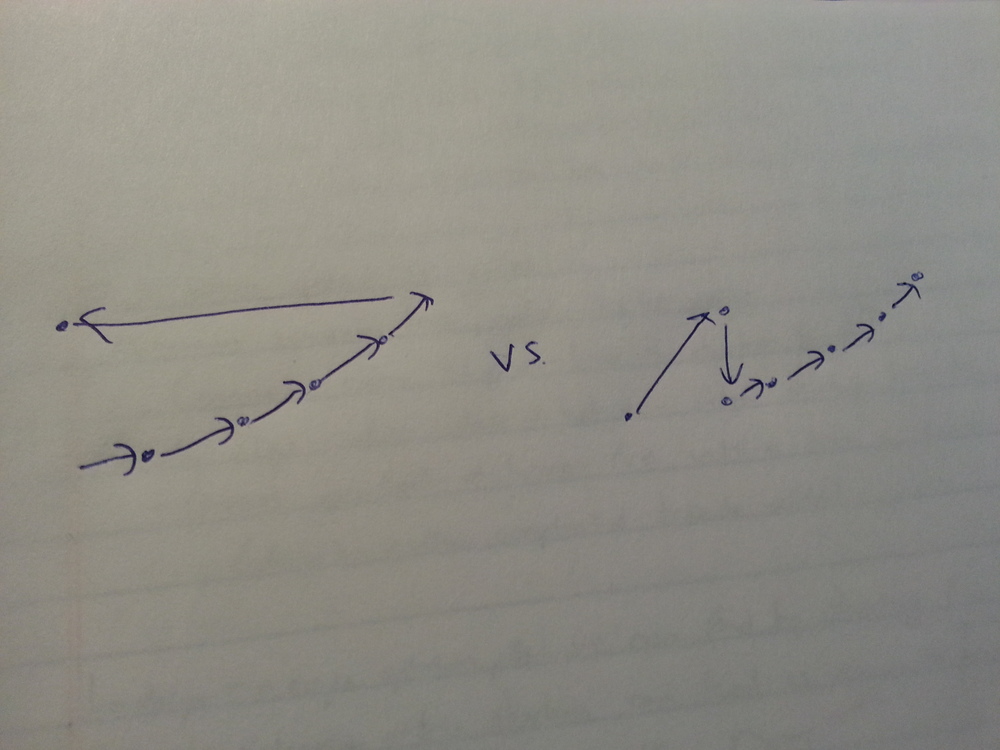

- Life is imperfectly transmitted code.

- We can now build mouse teeth (or human teeth, using stem cells from a lost tooth). We can build an ear, a bladder, and a trachea.

- Homo evolutis:

  - For better or worse, we’re beginning to control our own evolution.

  - This is “unnatural selection, or actual intelligent design.”

  - We have to live with the consequences, whether they are good or bad.

- Using these technologies, we have taken ourselves out of the food chain and doubled our lifespans. In that respect, it has been good for us so far.

- We usually talk about how great the digital revolution has been, but the revolution in the life sciences is, and will be, orders of magnitude greater.

- Enriquez co-founded Synthetic Genomics with J. Craig Venter, one of the first to sequence the human genome.

- Synthetic Genomics has built a cell that operates like a computer system: a cell that executes life code.

- It may be possible to reprogram a species to become another species.

- It’s like software that makes its own hardware.

- Algae are the best scalable production system for energy in a constrained world.

- “We are evolving ourselves.” In science, “there are decades when nothing happens and weeks when everything happens.” (A questioner in the audience pointed out that this quote comes from Lenin.)

- Q: “Do we have secular stagnation?”

- Enriquez: A resounding no. Today there are people who are smart and creative, and who have scale and ambition. Lots of great things are happening in the sciences. We are as advanced as ever, and increasingly so. One problem is that, with technology, our interest in sex is different than it used to be, and sex is no longer keeping the developed world’s population growing fast enough.